Gavin Wood Speech: JAM Delivery Status and the Medium- to Long-Term Strategy for Introducing ZK into JAM!

This article is the English version of Gavin Wood's speech at last month's Web3 Summit! Since the content of this series is quite extensive, we will publish it in four parts. This is the first part, mainly covering:

- The delivery status of JAM

- ZK performance has improved, but is still far from commercial viability

- 33 times recomputation vs mathematical proof: the real cost of two security models

- How much does a ZK-JAM node cost? The answer is 10 times more expensive than you think!

- The short-, mid-, and long-term evolution paths of ZK in JAM

Let’s take a look at the exciting insights Gavin shared!

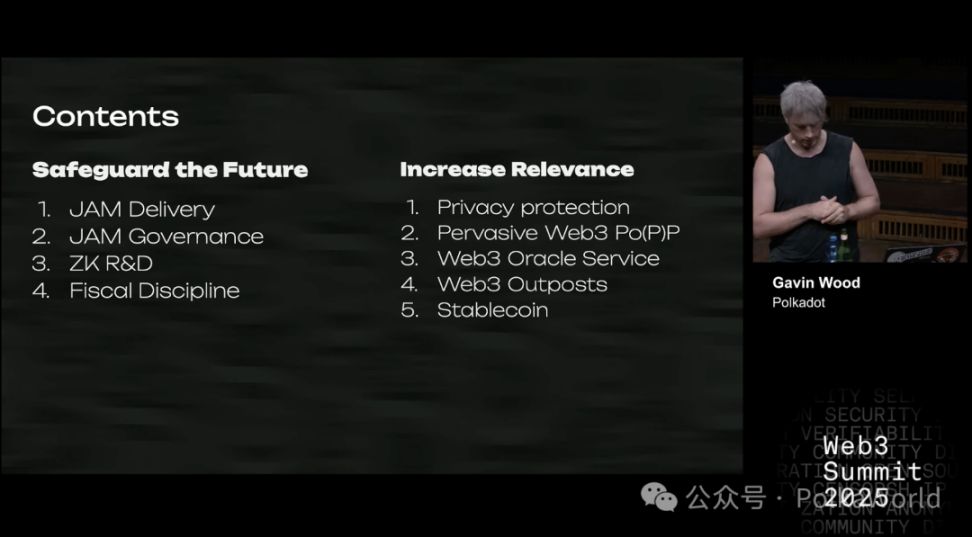

So, without further ado, what am I going to talk about in this speech?

First, I want to share my overall perspective on Polkadot, which is basically where my thinking stands right now: a “snapshot of the present.” You may have already heard of JAM, a project I have been researching for a long time and one that is closely linked to Polkadot. We hope it will eventually support the next stage of Polkadot’s development. In addition, I will talk about zero-knowledge cryptography (ZK), especially its application in expanding blockchain functionality.

Moreover, I will discuss the tokenomics of DOT. Next, I will introduce some new content I have been researching recently, which aims to improve existing capabilities and even bring entirely new possibilities to Polkadot and the broader Web3 world. This part covers multiple aspects—some will be detailed, others just touched upon. Alright, let’s officially begin.

Current Delivery Status of JAM

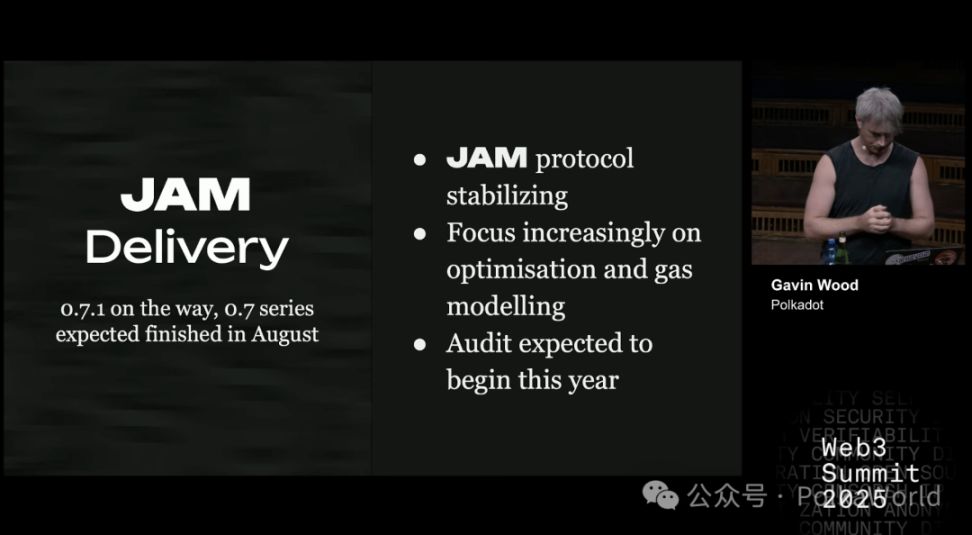

The initial version of JAM was 0.1, and it is gradually approaching 1.0. When it reaches 1.0, the JAM protocol will be ready for Polkadot to upgrade to. As the protocol stabilizes, our focus is shifting to optimization, especially gas modeling. Earlier this year, I gave a dedicated talk on this topic at ETHPrague, the Ethereum conference in Prague. Gas modeling itself is a very interesting but also extremely complex topic.

JAM is expected to begin protocol auditing this year. There isn’t much work left in the 0.7 series, but 0.8 will formally introduce gas modeling, and the workload will increase significantly. I expect we can move to version 0.9 this year and officially start the audit then.

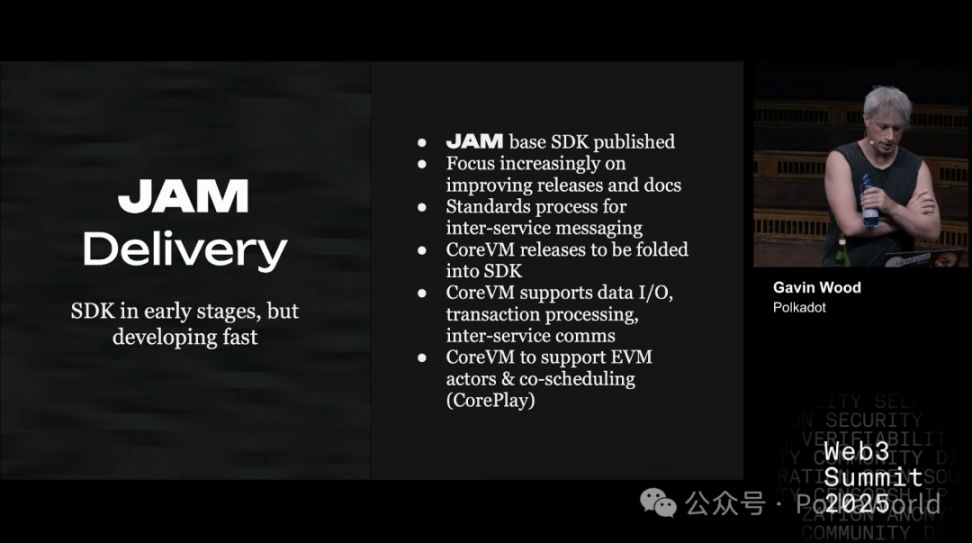

Of course, having a core protocol is one thing, but being able to develop on it is another. You need SDKs, documentation, and other development tools, and this part is still in its early stages. It is already possible to develop software on JAM, and at Parity I am the main person driving the construction and release of the SDK. In reality, though, this will require continuous investment and refinement over the coming months and even years. SDK development will not be limited to Parity, either: I expect more teams to join in and build their own JAM SDKs.

We have already started drafting standards for cross-service messaging, which can be seen as the JAM version of XCM/XCMP. Meanwhile, CoreVM is gradually becoming part of the SDK and will keep improving over the coming months. CoreVM already supports many features, such as audio output, video output, data input/output, and transaction processing, with internal services coming soon. It does not yet support the EVM, but this is a planned addition. Also, the mechanism I previously called coreplay (core collaborative scheduling) is planned to be implemented in the next 12–24 months.

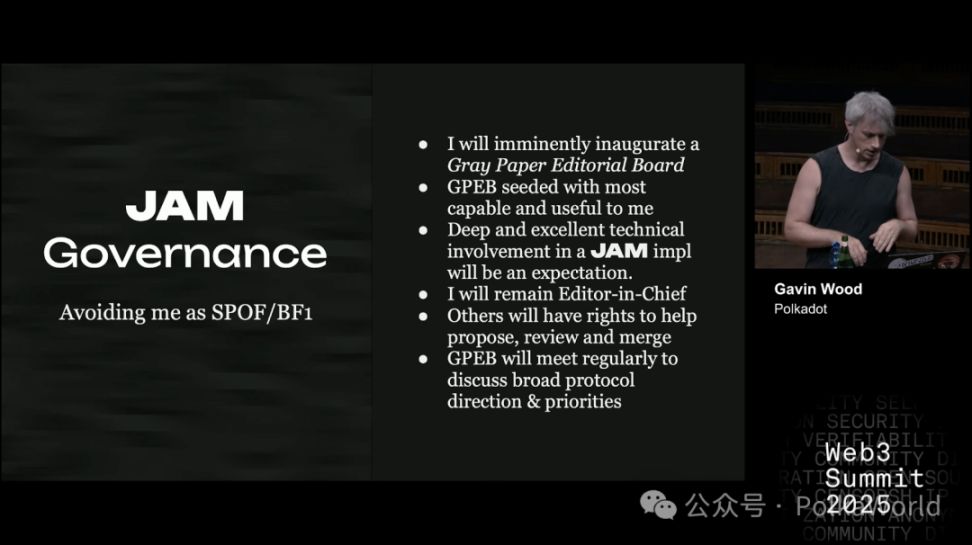

Recently, someone in the JAM chat group raised an interesting question: how can we keep me from being a single point of failure for JAM? Currently, the evolution of the JAM protocol relies entirely on what I write in the Gray Paper. This means that if something happens to me, the entire project could stall. Clearly, this is not good for JAM or for me.

Therefore, we treat the content of the Gray Paper as the technical specification for JAM. The latest Gray Paper is the latest JAM. If a version of the Gray Paper has been audited, then the JAM protocol it defines is considered production-ready—this is straightforward.

So, in the future, if updates to the Gray Paper are no longer solely decided by me, how will it evolve?

My idea is to establish an editorial committee. The initial members will be those who have truly participated in writing the Gray Paper, have a deep understanding of it, and have made substantial contributions. I expect these members to maintain a high level of technical involvement in the implementation of JAM. I will not completely withdraw and will continue to serve as the chief editor, but I hope to reduce my workload and give others the power to propose, review, and merge changes.

As JAM surpasses version 1.0, this editorial committee will take on higher-level responsibilities:

- Not just handling minor changes, but deciding the direction and priorities of JAM’s development;

- When there are differing opinions, the collective judgment of the committee should be the most authoritative voice.

I plan to appoint a deputy who will take over when I am absent, on vacation, or otherwise unavailable. In the long run, deputies will also be responsible for selecting, inviting, and deciding on new editorial committee members to ensure the mechanism can operate independently. Ultimately, I hope this governance system can gradually become independent, and even involve some external organizations, such as the Polkadot Fellowship.

I do plan to place the Gray Paper under an open license agreement, though the specific one has not been decided yet. It is likely to be a copyleft license, with some clauses to prevent patent abuse.

As for Polkadot’s governance, it has full authority to decide which protocol it runs. Polkadot is a sovereign protocol, and its governance mechanism is the embodiment of this sovereignty. Currently, Polkadot governance has basically made it clear: it wants to adopt JAM. This is good. At the same time, other networks can also choose to use JAM, since JAM is an open protocol.

If JAM continues to evolve in the future, Polkadot can choose to stay in sync and follow the latest version; if it is dissatisfied with JAM’s direction, it can also freeze at a certain version, or even modify the core protocol or directly fork the Gray Paper. This shows that JAM itself is an independent system, and I personally hope it can maintain a mutually beneficial and symbiotic relationship with Polkadot in the long run. Of course, if one day they go their separate ways and develop independently, that is also entirely feasible.

As long as both sides remain aligned, I expect Polkadot governance to actively participate in and support the operation of the Gray Paper editorial committee. If other protocols adopt JAM in the future, I also hope they can participate in a similar way.

Alright, that’s the current progress of JAM, or rather the stage it is about to reach. Next, I want to talk about zero-knowledge proofs (ZK).

ZK Performance Has Improved, But Is Still Far from Commercialization

Many people ask me this question: when will ZK (zero-knowledge proofs) truly become commercially viable?

Ethereum is very enthusiastic about ZK—their roadmap is almost entirely centered around ZK. In JAM, we actually only use a bit of ZK in some special consensus mechanisms for block construction; overall, we do not rely on it. But even so, this is still a question that must be taken seriously:

- When can ZK become a technology that truly expands computational capacity and is commercially viable?

- Is it there yet?

- If not, how much longer will it take?

If you look at materials in the Ethereum ecosystem (such as ethprovers.com), you’ll see some astonishing numbers claiming that ZK is already economically viable. But our investigation found that these numbers do not hold up. The good news is that, while ZK is not fully viable yet, the gap has narrowed significantly compared to 18 months ago.

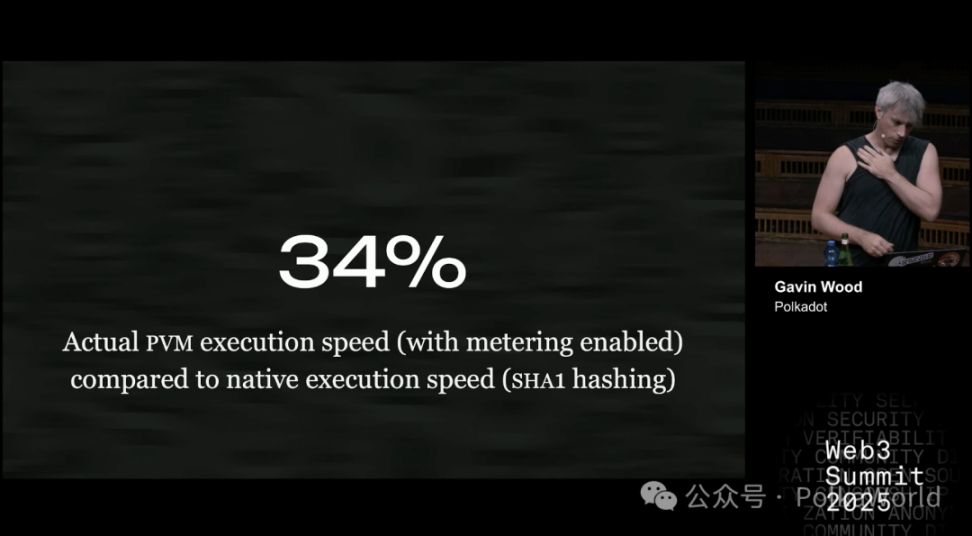

For example: currently, JAM’s virtual machine, PVM (essentially JAM’s version of the EVM), runs code about 34% slower than native execution. In other words, a program that takes 100 minutes to run natively would take about 134 minutes on PVM.

This result is actually quite good—we are satisfied with it, and there is still room for improvement.

Of course, in some cases the gap is larger, such as 50% or more. Especially for tasks like SHA-1 hashing, PVM execution is slower. This may be because compilers in native environments can use SIMD instruction sets or other optimizations, which PVM cannot do yet.
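A quick way to sanity-check the percentage is to turn “X% slower than native” into wall-clock time. The minimal Python sketch below does only that; `pvm_runtime` is a hypothetical helper name, and the 34% and 50% inputs are simply the figures quoted above.

```python
def pvm_runtime(native_runtime: float, percent_slower: float) -> float:
    """Runtime on PVM when PVM is `percent_slower`% slower than native,
    i.e. the native runtime multiplied by (1 + percent_slower / 100)."""
    return native_runtime * (1 + percent_slower / 100)


# Typical case quoted above: about 34% slower, so 100 minutes becomes ~134.
print(round(pvm_runtime(100, 34), 1))
# Harder cases such as SHA-1 hashing: 50% or more slower.
print(round(pvm_runtime(100, 50), 1))
# Equivalently, PVM runs at roughly 1 / 1.34 of native speed.
print(f"{1 / 1.34:.0%} of native speed")
```

Note that “34% slower” means a 1.34x runtime, i.e. roughly 75% of native speed, not 34% of native speed.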

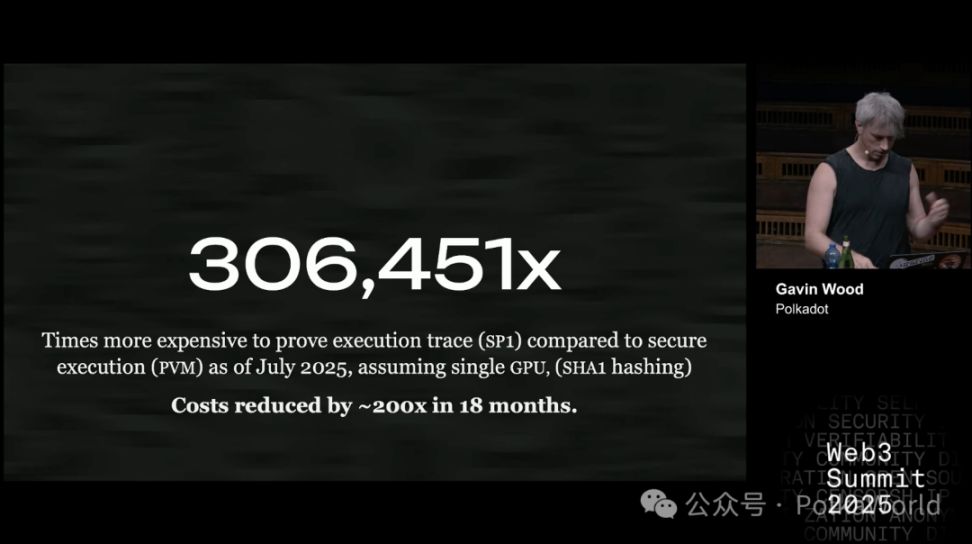

Next, let’s look at another key number: this is the cost of generating an execution proof using the best prover we can find, Succinct SP1—that is, the extra overhead compared to running directly on PVM. Note, the comparison is with PVM, not native. PVM itself is already about 34% slower than native.

Current test results are as follows: we used the latest version of the software and assumed only one GPU (because the public codebase only supports a single GPU). If it were a closed-source commercial version, it might scale to GPU clusters, but in open source, this is the limit. The test content is the same as before—still SHA-1 hashing—for consistent comparison.

So what’s changed?

Eighteen months ago, we ran similar experiments, and the overhead was much larger: about 60 million to 64 million times. Now, the cost has dropped significantly.

There are probably two reasons:

- On one hand, GPU rental prices have dropped;

- On the other hand, the software itself has been greatly optimized, possibly by an order of magnitude or more.

It’s worth noting that 18 months ago, the prover we used was not SP1 but RISC Zero. Regardless, the results show one thing: cutting-edge technology is advancing rapidly, and the progress is considerable.

As of July 2025, using SP1 (Succinct’s prover) to generate a proof for an execution trace is 306,451 times more expensive than securely executing the same computation directly in PVM. In the past 18 months, the cost of proving has dropped by about 200 times, but this number is still huge. ZK technology is indeed progressing rapidly, but it is still far more expensive than direct execution.
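Because the proving overhead is measured against PVM rather than native code, the two multipliers compound. The rough back-of-the-envelope sketch below assumes cost scales linearly with execution time; it chains the two factors and checks the “about 200 times” improvement against the earlier 60 to 64 million figure (bearing in mind that the earlier figure came from RISC Zero rather than SP1). The constant and function names are illustrative choices, not from the talk.

```python
PROVING_OVERHEAD_VS_PVM = 306_451             # SP1 proof cost / direct PVM execution (July 2025)
PVM_SLOWDOWN_VS_NATIVE = 1.34                 # PVM is about 34% slower than native
EARLIER_OVERHEAD = (60_000_000, 64_000_000)   # measured about 18 months earlier


def overhead_vs_native(proving_vs_pvm: float, pvm_vs_native: float) -> float:
    """Proving is measured against PVM, and PVM is itself slower than native,
    so relative to native execution the two multipliers compound."""
    return proving_vs_pvm * pvm_vs_native


def improvement(old_overhead: float, new_overhead: float) -> float:
    """How many times cheaper proving became between two measurements."""
    return old_overhead / new_overhead


print(f"vs native: ~{overhead_vs_native(PROVING_OVERHEAD_VS_PVM, PVM_SLOWDOWN_VS_NATIVE):,.0f}x")
for old in EARLIER_OVERHEAD:
    print(f"{old:,} -> {PROVING_OVERHEAD_VS_PVM:,}: "
          f"~{improvement(old, PROVING_OVERHEAD_VS_PVM):.0f}x cheaper")
```

Both ends of the earlier range land close to 200x, matching the improvement quoted above, while relative to native code the proving overhead comes out at roughly 410,000x.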

Next, let’s talk about gas metering.

Running code quickly is one thing, but the key is that you must be able to trust it. What if someone deliberately writes code to “slow things down”? In consensus mechanisms, if the system must reach agreement within a specified time, but the code is maliciously designed to be slow, the whole system could get stuck or even crash.

On Polkadot, this problem is less prominent because we have parachain slot auctions. That is, the identities of those submitting code to the system are basically known—they spent real money to buy slots, so they are unlikely to engage in destructive behavior that harms others and themselves.

But if we expand the scenario to a more open and general environment, the problem becomes serious.

What’s the solution?

The solution is to estimate, in advance, an upper bound on a piece of code’s execution time, that is, how long it will run in the worst case, and then ensure that, no matter what, its runtime never exceeds this worst case. Otherwise, if someone can make the code 10 times slower than our estimate, that’s a big problem.
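A minimal sketch of the metering idea, assuming a toy instruction set: every instruction is charged a gas cost chosen to upper-bound its worst-case execution time, and execution halts before the budget would be exceeded, so no program can run longer than its gas limit allows. The opcodes, costs, and helper names here are hypothetical and are not PVM’s actual gas schedule.

```python
from dataclasses import dataclass

# Hypothetical gas schedule: each cost is meant to upper-bound the worst-case
# execution time of that instruction, so total gas charged bounds total runtime.
GAS_COST = {"add": 1, "mul": 3, "load": 4, "store": 4, "jump": 2}


class OutOfGas(Exception):
    """Raised when the next instruction would exceed the remaining budget."""


@dataclass
class Meter:
    remaining: int

    def charge(self, op: str) -> None:
        cost = GAS_COST[op]
        if cost > self.remaining:
            # Stop *before* executing, so the worst-case bound is never exceeded.
            raise OutOfGas(f"cannot afford {op!r}: needs {cost}, has {self.remaining}")
        self.remaining -= cost


def run(program: list[str], gas_limit: int) -> int:
    """Charge gas for each instruction and return the gas used.
    Actual instruction execution is elided; only the metering is shown."""
    meter = Meter(gas_limit)
    for op in program:
        meter.charge(op)
        # ... execute `op` here ...
    return gas_limit - meter.remaining


print(run(["load", "mul", "add", "store"], gas_limit=20))  # 12

try:
    run(["jump"] * 100, gas_limit=50)  # a deliberately long-running program
except OutOfGas as err:
    print("halted:", err)
```

The key design point is that the schedule is calibrated to worst-case behavior, so a malicious program can exhaust its budget but never outrun it.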

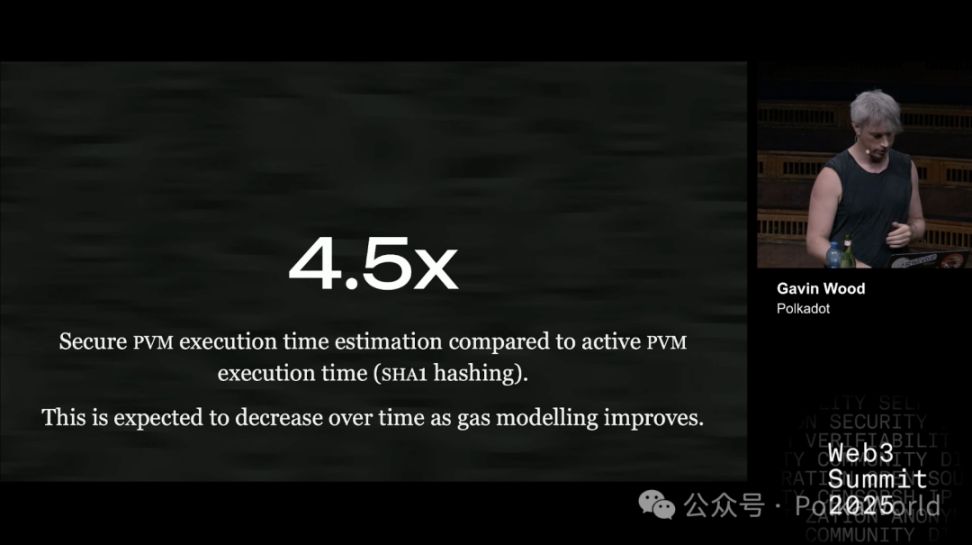

So how accurate are our worst-case estimates now?

Take SHA-1 hashing as an example. The current result is: to ensure security, we must assume it could be 4.5 times slower than normal. That is, if a piece of code normally takes 1 second, in the worst-case estimate, we must treat it as 4.5 seconds. Only then can we ensure that even the most malicious attacker cannot slow it down further.

This safety multiplier is exactly what we need to guarantee safety in a consensus mechanism with hard time constraints.

In the future, this multiple should be able to decrease, meaning the estimates will become more accurate and efficient. Right now, 4.5 times is the best result we got after a week or two of effort. Optimistically, it might drop to about 3 times in the future, but not much lower.
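One way to read the 4.5x figure, assuming the worst-case budget is a fixed amount of time per slot, is that typical honest code fills only a fraction of the time reserved for it. The hypothetical helpers below make that trade-off explicit for today’s 4.5x and the hoped-for 3x; this interpretation is ours, not a figure from the talk.

```python
def reserved_time(typical_seconds: float, safety_multiplier: float) -> float:
    """Worst-case time that must be reserved for code whose typical runtime
    is `typical_seconds`, given the worst-case over-estimate multiplier."""
    return typical_seconds * safety_multiplier


def honest_utilization(safety_multiplier: float) -> float:
    """Fraction of the reserved time that a typical, honest workload uses."""
    return 1 / safety_multiplier


for m in (4.5, 3.0):  # today's multiplier and the hoped-for future one
    print(f"x{m}: reserve {reserved_time(1.0, m):.1f}s per 1s of typical work, "
          f"honest utilization ~{honest_utilization(m):.0%}")
```

Under this reading, dropping from 4.5x to 3x would raise typical utilization from about 22% to about 33%.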

33 Times Recomputation vs Mathematical Proof: The Real Cost of Two Security Models

In Polkadot and JAM, we use a protocol called elves to ensure computational security. Its function is to let us determine that a certain computation has indeed been executed correctly.

Essentially, elves and zero-knowledge proofs (ZK) are somewhat similar:

- ZK uses mathematical proofs to give you a “hard guarantee” directly;

- Elves is more like a cryptoeconomic game: participants use signatures and some rules to prove the result is correct, assuming that “bad actors do not exceed one-third.”

When running elves, computations are repeated. Participants randomly decide whether to do this “recomputation.”

The result: in this mode, work is redone about 33 times on average. So its cost is about 33 times that of normal execution.
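The “about 33 recomputations” figure can be illustrated with a deliberately simplified model: each of N validators independently decides, at random, whether to re-execute a work report. The validator count of 1023 and the per-validator probability are assumptions chosen so that the expected number of rechecks is 33; the real elves protocol uses a more involved selection process (including escalation when checkers fail to show up), which this sketch does not model.

```python
import random


def average_rechecks(n_validators: int, p_check: float, trials: int, seed: int = 0) -> float:
    """Average number of validators that choose to re-execute a work report,
    when each one independently rechecks with probability `p_check`."""
    rng = random.Random(seed)  # seeded for reproducibility
    total = 0
    for _ in range(trials):
        total += sum(1 for _ in range(n_validators) if rng.random() < p_check)
    return total / trials


N_VALIDATORS = 1023            # assumed validator-set size
P_CHECK = 33 / N_VALIDATORS    # chosen so the expected number of rechecks is 33

avg = average_rechecks(N_VALIDATORS, P_CHECK, trials=2_000)
print(f"average rechecks: {avg:.1f} (expected {N_VALIDATORS * P_CHECK:.0f})")
print(f"cost relative to one execution: ~{avg:.0f}x")
```

Each recheck is a full re-execution, which is why the total cost scales with the average number of checkers rather than with any proof size.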

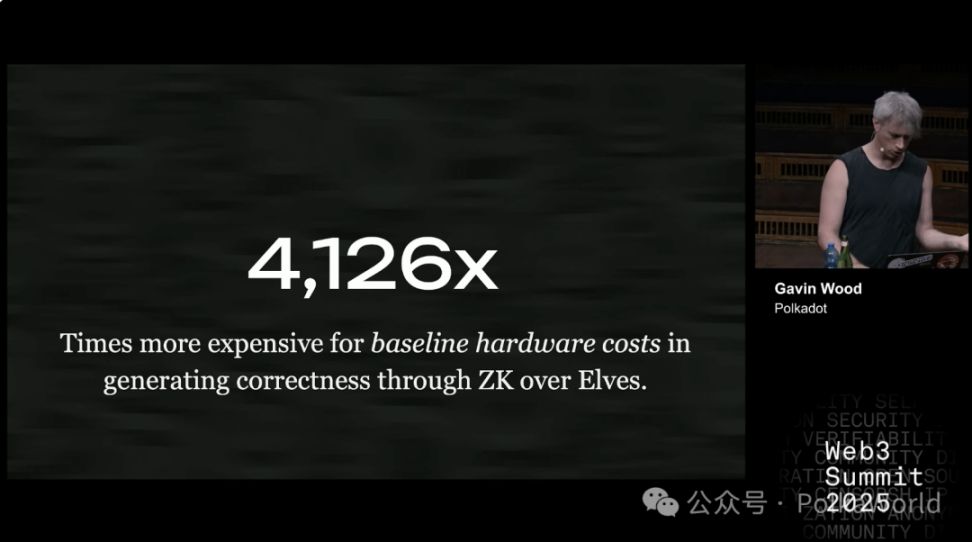

This allows us to calculate the cost difference between ZK and elves. The answer: ZK is about 4,000 times more expensive than elves. In other words, using zero-knowledge proofs to verify correctness is much more expensive than using the elves cryptoeconomic system. You can think of this as a cost comparison between different Rollup solutions.

PolkaWorld note: You can imagine elves as 33 classmates all copying homework and then checking answers to confirm there are no mistakes; ZK is like hiring a math PhD to write you a “guaranteed correct proof,” but the PhD might take days to write it.

This 4,000 times gap is huge. For ZK to become cost-effective in practice, its cost must drop significantly. Of course, we can also continue to optimize elves to make it more efficient.

However, the cost issue is not just about hardware. There are several key points:

- Sysadmin cost: No matter what hardware you run, sysadmin salaries are about the same. In many cases, sysadmin costs are even higher than hardware.

- Staking cost: To ensure bad actors do not exceed one-third, the system needs a filtering mechanism. In Polkadot, this is achieved through a “staking + slashing mechanism.” That is, participants must stake some funds (risk capital), so that “good validators” and possible “bad validators” can be distinguished.

The problem is: staking itself is expensive, which is another extra cost (I’ll elaborate on this later).

In contrast, ZK itself does not have the burden of staking. ZK logic is simple: either the proof is correct, or it’s not—it’s obvious at a glance.

But the problem is, ZK proof generation is too slow. If you run it on a single GPU, it may take hours; running the same computation directly on PVM (or a regular CPU) only takes milliseconds to seconds. The difference is obvious.

However, some have demonstrated that GPU cluster parallelization can reduce latency. If enough GPUs are connected, latency can be reduced. But the problem is:

The efficiency coefficient of parallelization is opaque: that is, how much the cost increases is unclear. Those who have experimented have not published this data—they may not want to. So we either secretly design experiments to measure it ourselves, develop our own code, or look for undiscovered related research.

In addition, there are verification and settlement issues.

For example, verifying on Ethereum L1 is currently even more expensive than generating the proof. We estimated that generating a proof costs about $1 to $1.20, but verifying on Ethereum L1 costs $1.25. Of course, if you have your own chain, verification may be much cheaper, but you still need:

- Verification

- Settlement

- Finality

- Storage

ZK cannot eliminate these steps. So in the end, you still need to ensure that malicious participants do not exceed one-third, which means you still need staking, just like Ethereum L1, Polkadot, and most chains.

How Much Does a ZK-JAM Node Cost? The Answer Is 10 Times More Expensive Than You Think!

Now, let’s think from another angle: suppose there is a ZK-JAM guarantor node—what is its operating cost?

Let me briefly explain: in JAM, there is a role called guarantors—they are like the “gatekeepers” of the system. All transactions or tasks are first handed to them; they perform the computation, package the results, and then hand them to other validators. Validators may or may not recheck their results.

Now let’s assume a scenario:

- Remove the rechecking step (no longer require others to repeat the guarantor’s work);

- Lower the staking requirement (because you don’t fully rely on the guarantor’s credibility);

- But require guarantors to run GPU clusters and use ZK to generate proofs.

So, what is the cost of doing this?

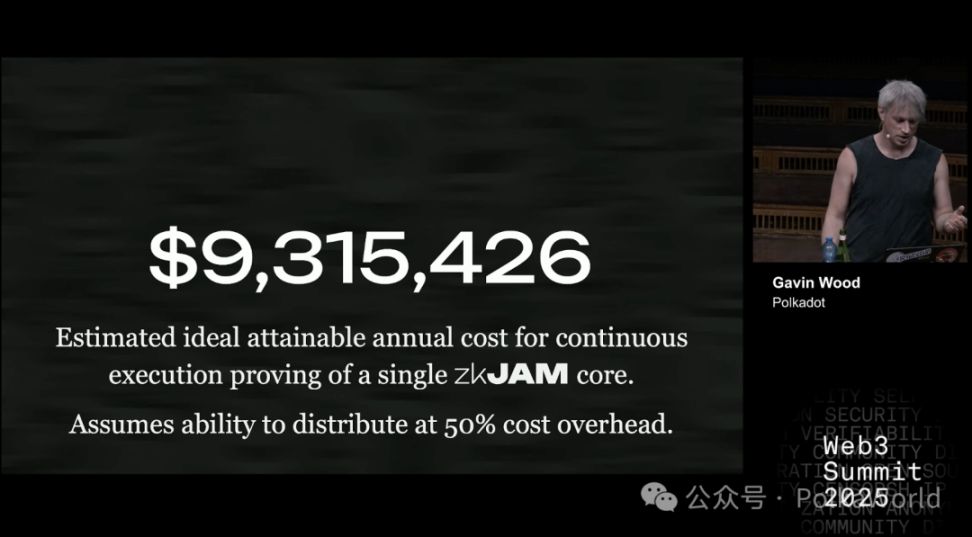

According to estimates: generating a ZK proof costs about $1.18 (using SHA-1 as an example, equivalent to 6 seconds of computation and 12MB of I/O). This is roughly the amount of work a JAM core can complete in one slot. JAM has a total of 341 cores, and this is the cost per core.

Of course, this is just a rough estimate. The cost will vary for different tasks: for other computations, it may be more or less than $1.18, but it’s roughly this magnitude.

If we annualize it: the yearly cost per core is about $9.5 million.

This assumes that GPU cluster parallelization brings a 50% extra overhead, mainly to reduce latency. However, this 50% is just a guess—in reality, it could be only 5%, or as high as 200%. What is certain is—there will definitely be extra overhead, and it could be significant.
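The annual figure can be reproduced roughly from the per-slot cost. The sketch below assumes one proof per 6-second JAM slot, every slot fully used, a 365.25-day year, and the 50% parallelization overhead guessed above; `annual_cost_per_core` is a hypothetical helper, and the second loop shows how sensitive the result is to that guess.

```python
SLOT_SECONDS = 6                        # assumed: one proof per 6-second JAM slot
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # assumed: 365.25-day year
CORES = 341                             # total JAM cores quoted above


def annual_cost_per_core(cost_per_slot: float, parallel_overhead: float) -> float:
    """Yearly proving cost for one fully used core, inflated by the (unknown)
    extra cost of splitting each proof across a GPU cluster."""
    slots_per_year = SECONDS_PER_YEAR / SLOT_SECONDS
    return cost_per_slot * slots_per_year * (1 + parallel_overhead)


for cost in (1.18, 1.20):
    per_core = annual_cost_per_core(cost, parallel_overhead=0.5)
    print(f"${cost:.2f}/slot: ${per_core / 1e6:.2f}M per core per year, "
          f"${per_core * CORES / 1e9:.2f}B for all {CORES} cores")

# Sensitivity to the guessed overhead (5%, 50%, 200%) at $1.18 per slot.
for overhead in (0.05, 0.5, 2.0):
    print(f"overhead {overhead:.0%}: "
          f"${annual_cost_per_core(1.18, overhead) / 1e6:.2f}M per core per year")
```

With $1.18 to $1.20 per slot and 50% overhead, this lands at roughly $9.3 to $9.5 million per core per year, in line with the figure quoted above; the overhead range alone moves the result between about $6.5 million and $18.6 million.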

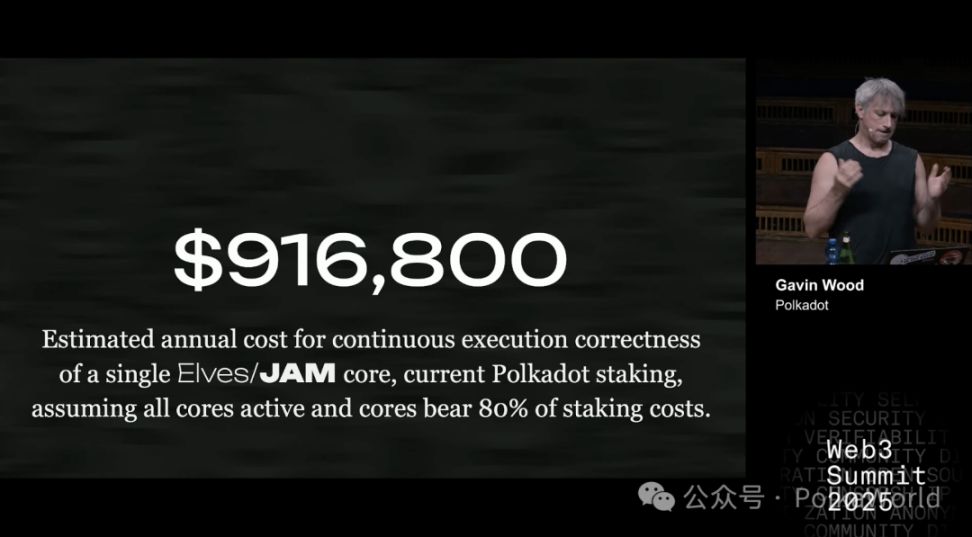

So, how does this compare to Polkadot’s current staking mechanism?

Under the current mechanism, to provide security equivalent to elves (or about 80% of elves’ security), the cost per core is less than $1 million.

The 80% here is because: even if you switch to ZK, you still need some staking to ensure the security of other key parts, such as:

- Main chain operation

- Settlement

- Finality

- Storage

All these are important, but computational correctness is the core, and it accounts for about 80% of the staking cost.

Suppose we run 341 cores and keep the current Polkadot staking economic model; the cost is then as shown on the slide. If the number of cores decreases, the cost per core actually rises, because the “total pot” of staking stays the same but is shared among fewer cores.

So, to summarize: currently, the cost of ZK is about 10 times that of elves.
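The two claims above (per-core staking cost rises as the core count falls, and ZK costs roughly 10 times more) can be sketched as simple arithmetic. The staking “pot” below is hypothetical: it is merely the “less than $1 million per core” bound multiplied by 341 cores, not a published figure, and `per_core_staking` is an illustrative helper.

```python
ZK_PER_CORE_PER_YEAR = 9.5e6   # annualized ZK proving cost per core (figure quoted above)
ELVES_PER_CORE_BOUND = 1.0e6   # "less than $1 million" per core with 341 cores
BASELINE_CORES = 341

# Hypothetical fixed staking pot implied by the per-core bound at 341 cores.
STAKING_POT = ELVES_PER_CORE_BOUND * BASELINE_CORES


def per_core_staking(pot: float, cores: int) -> float:
    """The pot is fixed, so the cost attributed to each core grows as cores shrink."""
    return pot / cores


for cores in (341, 170, 85):
    print(f"{cores} cores: up to ${per_core_staking(STAKING_POT, cores) / 1e6:.2f}M per core")

# Since elves costs *less than* $1M per core, this ratio is a lower bound.
print(f"ZK / elves: more than {ZK_PER_CORE_PER_YEAR / ELVES_PER_CORE_BOUND:.1f}x")
```

Halving the number of cores roughly doubles the per-core staking cost, which is why full core utilization matters so much to this comparison.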

Of course, if we can lower the security cost of elves (which I think is possible), for example from about $916,000 to $270,000 per core, or even further down to $144,000 with new mechanisms we are developing, the cost gap between ZK and elves will widen further. But note, $144,000 is already a relatively optimistic estimate.

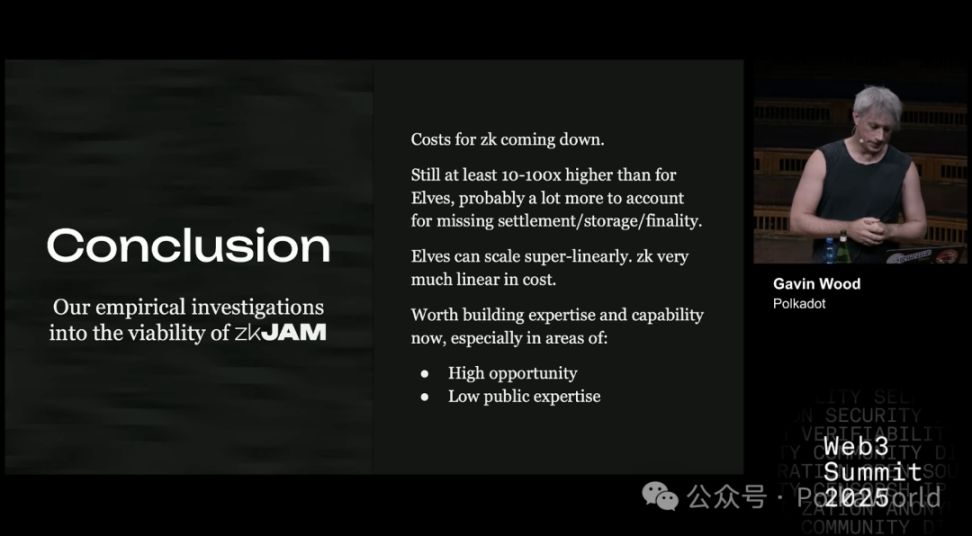

So, what’s the final conclusion?

The cost of ZK is indeed decreasing, but even so, it is still about 10 to 100 times more expensive than elves. Moreover, there are some additional uncertain costs, such as settlement, storage, and finality—these are already natively supported by JAM or can be leveraged by elves, but not by ZK.

In addition, elves have an advantage: they can scale superlinearly. This means you can connect multiple JAM networks and let them share the same validator set, making the overall efficiency higher. ZK does not have this capability—it can only scale linearly. If you want to generate a proof for another core, you must pay the same cost again, with no merging or reuse possible.

The Short-, Mid-, and Long-Term Evolution Paths of ZK in JAM

So, from a strategic perspective, which path to take depends on the specific situation.

I think a reasonable strategy is:

- Reduce proving costs: they need to drop by at least 1–2 orders of magnitude. Based on past experience, this may take 18 months to 5 years.

- There must be open-source tools: ones that can efficiently generate proofs in a distributed manner on GPU clusters. Currently, there are no mature tools of this kind, or at least none that are the fastest and best. Without such tools, our current cost estimates are not reliable.

- Core price issue: If the market price of a core already falls within a range that makes the elves mode reasonable, then ZK’s advantage disappears.

- Security choice: The market needs to distinguish between two types of security: ZK provides “perfect security,” while elves provide “economically constrained security.” The question is, does the market really care which is used? That’s still uncertain.

- Remove high staking dependency: We must be able to complete other tasks handled by JAM/elves, such as storage, settlement, and finality, without relying on large-scale staking. If we still have to rely on massive staking as we do now, there is no advantage—ZK solutions will only be more expensive.

Based on this, my suggested ZK strategy is:

- Start with easy-to-try directions: for example, develop a ZK-JAM service framework, but still use the existing JAM cryptoeconomic mechanism (elves) for security.

- Leverage JAM’s strengths: a JAM core has strong computational power (CPU) and decent I/O (12MB), and PVM execution efficiency is high. This means we can do a lot of ZK verification directly in the JAM core, without the need for the expensive and complex external proof process.

- Optimize the proving step: traditional ZK proving usually has several stages, with a final “proof compression” step to make the proof smaller and easier to verify. But in the JAM core, since the computing power is strong, this step may not be necessary, saving costs.

- Prioritize storage proofs: because JAM cores have strong computing power but relatively limited I/O, storage proofs can make up for this weakness and allow the system to process large numbers of transactions quickly.

- Other simple tasks: such as signature verification, are already easy to accomplish and are not bottlenecks.

In other words, the real challenge is ensuring that the data relied on by transactions is correct. This is the key problem we need to solve.

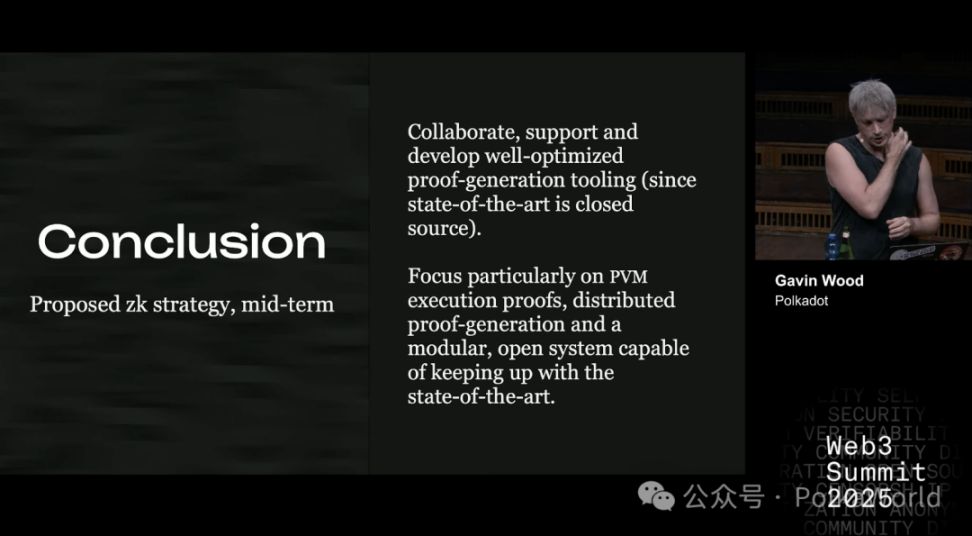

In the mid-term, a reasonable approach is:

We already have a brand new Kusama vision: to build a ZK-enabled network. So it is very appropriate to use the budget behind this vision and collaborate with other teams to focus on tools for efficient, distributed proof generation.

- If no team is working on this now, then start a new project directly;

- If there is already a team working on it, or willing to pivot to it, then collaborate with them and support them to do it well.

In particular, focus on PVM execution proofs, because this is the key to keeping ZK-JAM compatible with regular JAM in the future, and distributed proof generation is essential.

The goal is to keep the system modular and open, so it can keep up with cutting-edge research. Only by keeping up with technological advances can we reduce the cost of proofs by several more orders of magnitude and make them truly commercially viable.

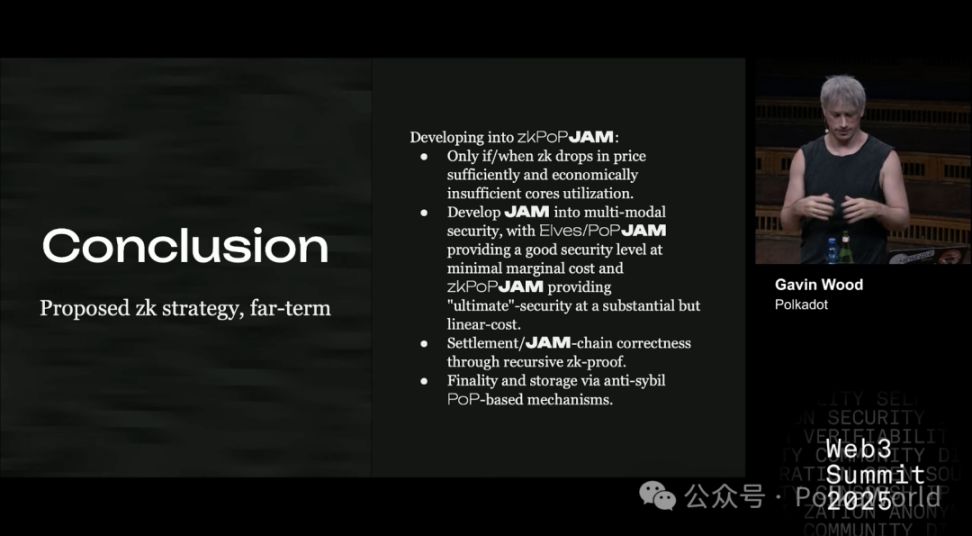

In the long term, if we really want ZK to become the core solution, we must find a way to replace staking. Because as long as staking exists, costs will remain very high.

So, how do we achieve a fully ZK-based JAM?

First, this is only worth doing if ZK costs are low enough and it is clear that core utilization is not economically viable under the current model. This cannot be determined yet, so it is a conditional assumption.

Once the conditions are met, we can evolve JAM into a multi-mode security model:

- On one hand, provide cheap but limited security (like elves, low cost);

- On the other hand, provide expensive but stronger perfect security (relying on ZK, with linearly increasing costs).

The key issue is: we must find a way to achieve finality and storage without relying on staking.

One possible direction is Proof of Personhood. If this mechanism can be integrated into the core protocol, it can greatly improve efficiency and capital utilization.

However, to achieve this, there must be a very strong anti-sybil mechanism. Most current solutions are not strong enough—they either rely on some authority or have an organization collect user data to decide who is real and who is not. This approach is obviously centralized, and only a very few solutions are close to feasible.

