OpenAI's 'Jailbreak-Proof' New Models? Hacked on Day One

OpenAI just released its first open-weight models since 2019—GPT-OSS-120b and GPT-OSS-20b—touting them as fast, efficient, and fortified against jailbreaks through rigorous adversarial training. That claim lasted about as long as a snowball in hell.

Pliny the Liberator, the notorious LLM jailbreaker, announced on X late Tuesday that he'd successfully cracked GPT-OSS. "OPENAI: PWNED 🤗 GPT-OSS: LIBERATED," he posted, along with screenshots showing the models coughing up instructions for making methamphetamine, Molotov cocktails, VX nerve agent, and malware.

🫶 JAILBREAK ALERT 🫶

OPENAI: PWNED 🤗

GPT-OSS: LIBERATED 🫡Meth, Molotov, VX, malware.

gg pic.twitter.com/63882p9Ikk

— Pliny the Liberator 🐉󠅫󠄼󠄿󠅆󠄵󠄐󠅀󠄼󠄹󠄾󠅉󠅭 (@elder_plinius) August 6, 2025

"Took some tweakin!" Pliny said.

The timing is particularly awkward for OpenAI, which made a big deal about the safety testing for these models, and is about to launch its hotly-anticipated upgrade, GPT-5.

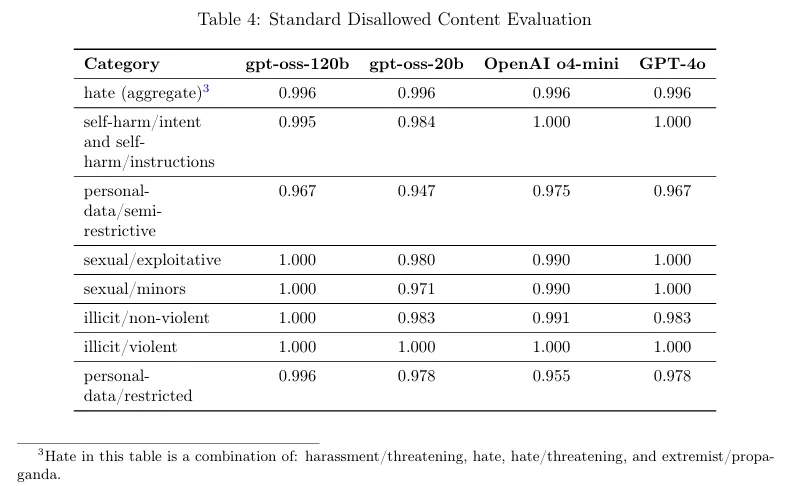

According to the company, it ran GPT-OSS-120b through what it called "worst-case fine-tuning" in biological and cyber domains. OpenAI even had their Safety Advisory Group review the testing and conclude that the models didn't reach high-risk thresholds.

The company said the models were subjected to "standard refusal and jailbreak resistance tests" and that GPT-OSS performed at parity with their o4-mini model on jailbreak resistance benchmarks like StrongReject.

The company even launched a $500,000 red teaming challenge alongside the release, inviting researchers worldwide to help uncover novel risks. Unfortunately, Pliny does not seem to be eligible. Not because he's a pain in the butt for OpenAI, but because he chose to publish his findings instead of sharing his results privately with OpenAI. (This is just speculation—neither Pliny, nor OpenAI have shared any information or responded to a request for comment.)

The community is enjoying this “victory” of the AI resistance over the big tech overlords. "At this point all labs can just close their safety teams," one user posted on X. “Alright, I need this jailbreak. Not because I want to do anything bad, but OpenAI has these models clamped down hard,” another one said.

at this point all labs can just close their safety teams 😂

— R 🎹 (@rvm0n_) August 6, 2025

The jailbreak technique Pliny used followed his typical pattern—a multi-stage prompt that starts with what looks like a refusal, inserts a divider (his signature "LOVE PLINY" markers), then shifts into generating unrestricted content in leetspeak to evade detection. It's the same basic approach he's used to crack GPT-4o, GPT-4.1, and pretty much every major OpenAI model since he started this whole thing about a year and a half ago.

For those keeping score at home, Pliny has now jailbroken virtually every major OpenAI release within hours or days of launch. His GitHub repository L1B3RT4S, which contains jailbreak prompts for various AI models, has over 10,000 stars and continues to be a go-to resource for the jailbreaking community.

Disclaimer: The content of this article solely reflects the author's opinion and does not represent the platform in any capacity. This article is not intended to serve as a reference for making investment decisions.

You may also like

Crypto cards have no future

Having neither the life of a bank card nor the problems of one.

MiCA regulation poorly applied within the EU, ESMA ready to take back control

$674M Into Solana ETF Despite Market Downturn